The Death of Software 2.0 (A Better Analogy!)

The age of PDF is over. The time of markdown has begun. Why memory hierarchies are the best analogy for how software must change, and why software is unlikely to command the most value.

When I last wrote about software, I received significant pushback. Today, I believe that Claude Code is confirming the original case I had all along. Software is going to become an output of hardware and an extension of current hardware designs. With this in mind, I want to write today about how I see software changing from here.

But let’s start with one core conviction. Claude Code is a glimpse of the future. Assuming it improves, has harnesses, keeps scaling to large context windows, and becomes only marginally more intelligent, I believe this is enough to take us to the next state of AI. I cannot stress enough that Claude Code is the ChatGPT moment repeated. You must try it to understand.

One day, the successor to Claude Code will make a superhuman interface available to everyone. And if Tokens were TCP/IP, Claude Code is the first genuine website built in the age of AI. And this is going to hurt a large part of the software industry.

Software (Especially Seat Based) is in for a Much Rougher Ride

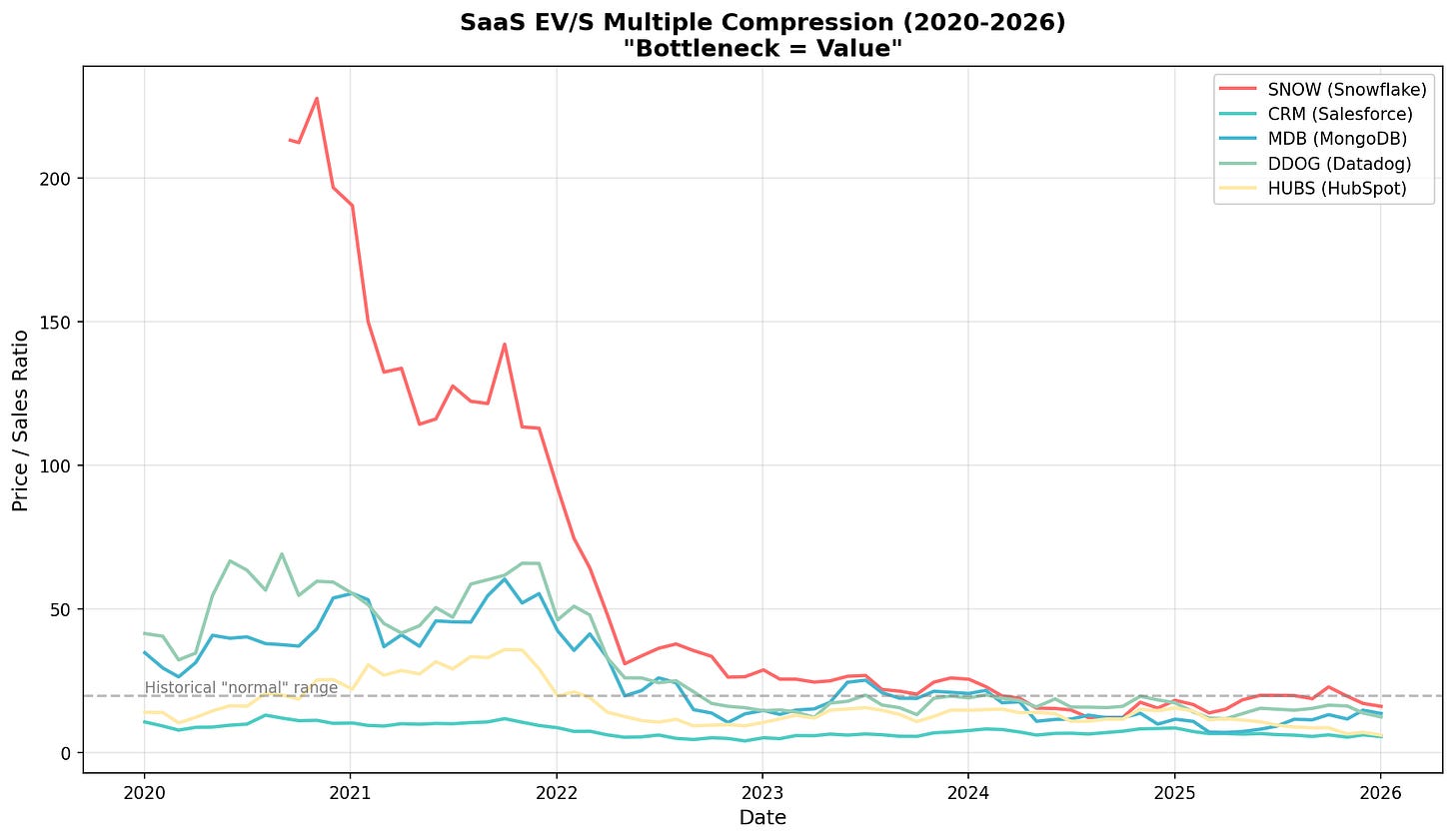

The environment may be rough at OpenAI, but at a traditional SaaS company, there is likely no greater whiplash than going from “SaaS is eating the world” in 2012 to “SaaS is screwed” today. The stocks reflect it; multiple compression at these companies has been painful and will persist.

This is structural. I believe it’s time to rethink software’s value proposition, and I have what I consider the best analogy for what the future holds. Afterwards, we will digest what software will look like as an extension of computing, because I believe that Claude Code resembles the memory hierarchy in computing. Let me explain.

The New Model of Software

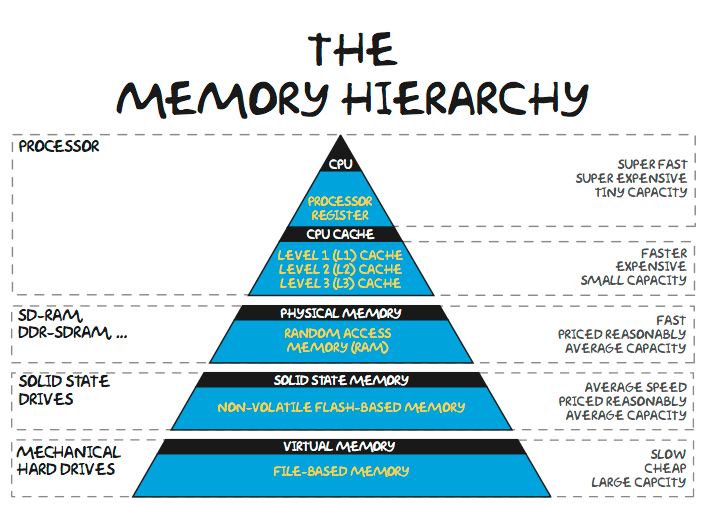

Claude Code (and subsequent innovations) clearly will change a lot about software, but the typical (and right) pushback is that you cannot use “non-deterministic software” for defined business practices. However, there is a persistent design pattern in hardware that addresses this difference: the memory hierarchy. No one can rely on anything in a computer's non-persistent memory, yet it is one of the most valuable components of the entire stack.

For those unfamiliar with computer science, there is a memory hierarchy that trades capacity and persistence for speed, and the system works because there are handoffs between levels. In the traditional stack, SRAM sits at the top; overflow is to DRAM, which is non-persistent (if you turn it off, it goes away), and then to NAND, which is persistent (if you turn it off, it persists).
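For readers who prefer code to prose, here is a minimal sketch of that handoff. It is a toy model, not how real hardware works: the `Tier` and `Hierarchy` classes, the capacities, and the evict-the-oldest rule are all assumptions made for illustration. Writes land in the fastest tier, overflow cascades down, and powering off wipes everything that is not persistent.

```python
from collections import OrderedDict
from typing import List, Optional, Tuple


class Tier:
    """One level of a toy memory hierarchy (hypothetical, for illustration)."""

    def __init__(self, name: str, capacity: int, persistent: bool) -> None:
        self.name = name
        self.capacity = capacity
        self.persistent = persistent
        self.data = OrderedDict()  # insertion order doubles as "age"

    def put(self, key: str, value: str) -> Optional[Tuple[str, str]]:
        """Store a value; return the oldest entry if the tier overflows."""
        self.data[key] = value
        self.data.move_to_end(key)
        if len(self.data) > self.capacity:
            return self.data.popitem(last=False)
        return None


class Hierarchy:
    """Tiers ordered from fastest (top) to slowest (bottom)."""

    def __init__(self, tiers: List[Tier]) -> None:
        self.tiers = tiers

    def write(self, key: str, value: str) -> None:
        # Write to the fastest tier; each overflow is handed to the next tier.
        item: Optional[Tuple[str, str]] = (key, value)
        for tier in self.tiers:
            item = tier.put(*item)
            if item is None:
                break

    def read(self, key: str) -> Optional[Tuple[str, str]]:
        # Search from fastest to slowest; report which tier answered.
        for tier in self.tiers:
            if key in tier.data:
                return tier.name, tier.data[key]
        return None

    def power_off(self) -> None:
        # Only persistent tiers keep their contents.
        for tier in self.tiers:
            if not tier.persistent:
                tier.data.clear()


memory = Hierarchy([
    Tier("SRAM", capacity=1, persistent=False),
    Tier("DRAM", capacity=2, persistent=False),
    Tier("NAND", capacity=100, persistent=True),
])
for key in ["a", "b", "c", "d"]:
    memory.write(key, key.upper())
```

After the four writes, the newest value sits in SRAM and the oldest has been pushed all the way down to NAND. Calling `memory.power_off()` then leaves only what reached NAND.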

I don’t think it’s worth matching the hierarchy too closely, but I believe that Claude Code and Agent Next will be the non-persistent memory stack in the compute stack. Claude Code is DRAM.

I believe that AI and software will be an extension of this, and we can already identify which layers correspond to which. The “CPU” in the hierarchy comprises raw information, and the fast memory in the hierarchy corresponds to the context window. This level of context is very fast information, not persistent, and gets cleared systematically. The output of work performed in non-persistent memory is passed to NAND, where it is stored for the long term.

Now that the code is merely an output of hardware, I believe this analogy applies.

AI Agents and their context windows are going to be the new “fast memory”, and I believe that infrastructure software is going to look a lot closer to persistent memory. It will have high value, structured output, and will be accessed and transformed at a much slower rate. I believe the “software of the future” looks a lot more like NAND: persistent, accurate information that needs to be stored. In software parlance, it will be the “single source of truth” that AI agents interact with and whose information they manipulate.

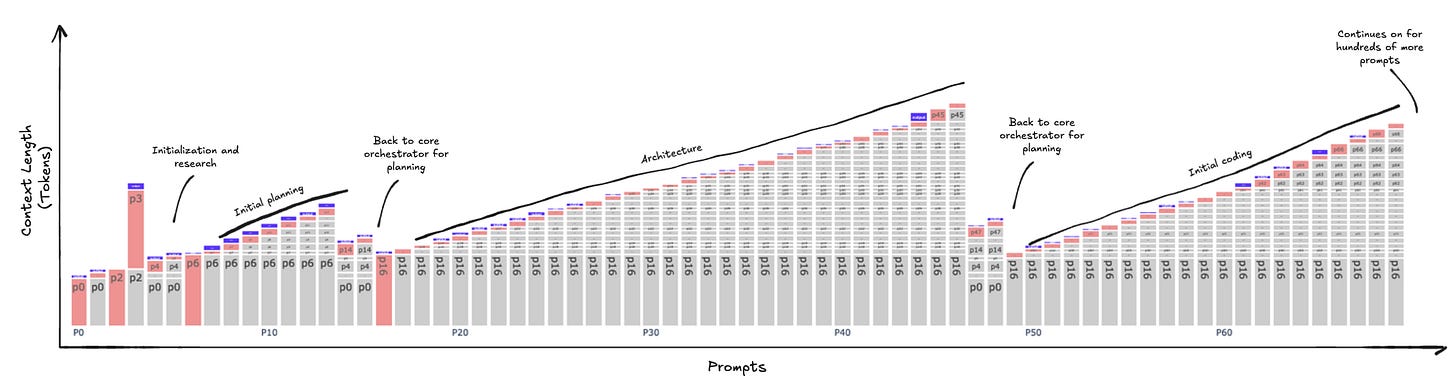

If you’re visually inclined, here’s a diagram of a Claude Code context window being compacted over and over. Another way to think about this is that it is an identity for a compute cycle; once the task is finished, it is transferred to slower memory and continues.

Each AI agent computation cycle is a scratchpad. Each context window is a clock cycle: cached state accumulates until the cache is flushed, after which information is processed. Afterward, the entire context is discarded, leaving only the output. Computation is ephemeral, and information processing by a higher tier of computation largely abstracts away most of human reasoning.
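To make the scratchpad idea concrete, here is a rough sketch of one such cycle. Everything in it is hypothetical: `ContextWindow`, `PersistentStore`, and `run_task` are names invented for this example, and the "compaction" is a placeholder string rather than a real summarization step. The point is only the shape: notes pile up in a bounded window, get compacted when it overflows, and only the final output is committed to the durable store.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ContextWindow:
    """Ephemeral scratchpad: fast, bounded, discarded after each task."""
    capacity: int
    notes: List[str] = field(default_factory=list)

    def add(self, note: str) -> None:
        self.notes.append(note)
        if len(self.notes) > self.capacity:
            self.compact()

    def compact(self) -> None:
        # Placeholder for real summarization: collapse all notes into one.
        self.notes = [f"summary of {len(self.notes)} notes"]


class PersistentStore:
    """The 'NAND' layer in the analogy: slow, durable, the source of truth."""

    def __init__(self) -> None:
        self.records: Dict[str, str] = {}

    def commit(self, key: str, value: str) -> None:
        self.records[key] = value


def run_task(task_id: str, steps: List[str], store: PersistentStore,
             capacity: int = 3) -> None:
    """One 'clock cycle': fill the window, commit the output, drop the rest."""
    window = ContextWindow(capacity=capacity)
    for step in steps:
        window.add(step)
    # Only the output survives; the window is discarded when this returns.
    store.commit(task_id, f"{task_id}: done after {len(steps)} steps")


store = PersistentStore()
run_task("t1", ["read", "plan", "edit", "test", "report"], store)
```

Once `run_task` returns, nothing of the window remains; the only trace of the work is the record in `store.records`.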

Importantly, I think there is a world in which software doesn’t go away, but its role must change. In this analogy, data, state, and APIs will be persistent storage, akin to NAND, whereas human-oriented consumption software will likely become obsolete. All horizontal software companies oriented at human-based consumption are obsolete. The entire model will be focused on fast information processors (AI Agents), using tokens to transform information and depositing the answers back into memory. Software itself must change to support this core mechanism, as the compute engine at the top of the hierarchy is primarily nonhuman, namely an AI agent.

I believe that next-generation software companies must completely shift their business models to prepare for an AI-driven future of consumption; otherwise, they will be left behind.

Glimpses of the Future

So what does this future look like? I believe that all software must leave information work as soon as possible. I believe that the future role of software will not have much “information processing”, i.e., analysis. Claude Code or Agent-Next will be doing the information synthesis, the GUI, and the workflow. That will be ephemeral and generated for the use at hand. Anyone should be able to access the information they want in the format they want and reference the underlying data.

What I’m trying to say is that the traditional differentiation metrics will change. Faster workflows, better UIs, and smoother integrations will all become worthless, while persistent information, a la an API, will become extremely valuable. Software and infrastructure software will become the “NAND” portion of the memory hierarchy.

Since I’m relying heavily on the history of memory: the last time a new competitive technology came out, it was an extinction event for the magnetic cores that DRAM replaced. I think this is probably going to be the case for UI companies like Tableau and other visualization software, connectors like Zapier and Make, and RPA companies like UiPath. These are all facing an extinction-level event.

Other companies that I think could be significantly affected include Notion and Airtable. Monday, Asana, and Smartsheet are merely UIs for tasks; why should they exist? Figma could be significantly disrupted if UIs, as a concept humans create for other humans, were to disappear.

Companies that are interesting are “sources of truth,” but many of them need to change. An example might even be Salesforce, a SaaS company. I don’t think the UI is that great, and most of the custom projects are just hardening workflows in the CRM. For Salesforce to make the leap, it needs to focus its product on being consumed, manipulated, and maintained by an AI agent, while being the best possible NAND in this stack. The problem is that Salesforce will want to try to go up the stack, and by doing so, may miss the shift completely.

Most SaaS companies today need to shift their business models to more closely resemble API-based models to align with the memory hierarchy of the future of software. Data’s safekeeping and longer-term storage are largely the role of software companies now, and they must learn to look much more like infrastructure software to be consumed by AI Agents. I believe that is what’s next.

This raises the question: what does this look like for the industry as a whole in the near future? I believe the next 3-5 years will be a catastrophic sea change.