AI Industry Structure and Business Model: What Inning is It?

If LLMs are the next internet, it's early. Plus a detailed competitive analysis of hyperscalers versus Nvidia.

What inning is AI in? If history is a guide, it’s hard to predict the twists and turns of a new market growing from nothing. Here’s my humble attempt at estimating AI penetration and my thoughts on the run-rate business of AI models and industry structure.

Internet Adoption vs. AI

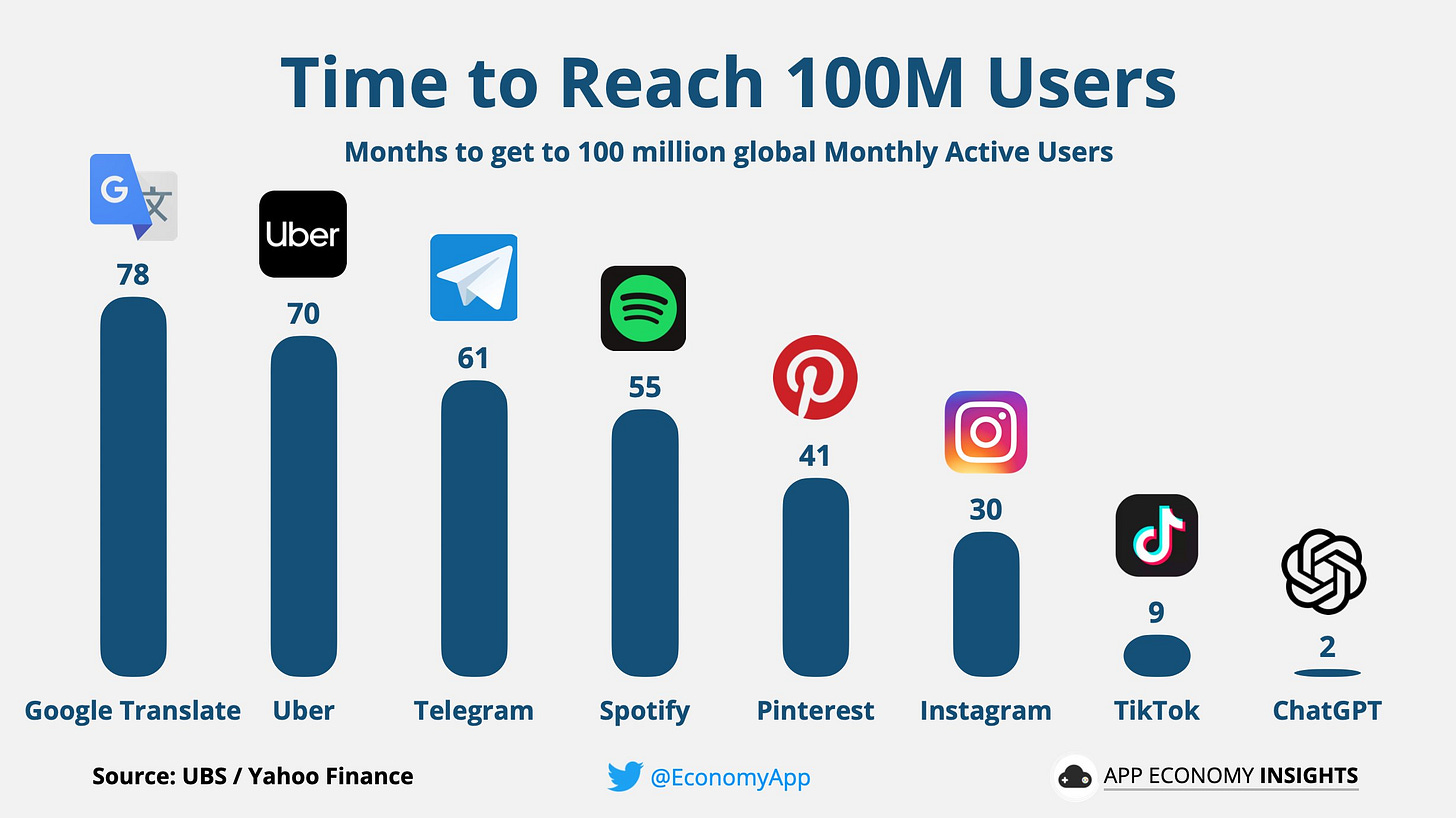

The internet remains the best case study to anticipate the implications of Generative AI and its ripple effects. Some people focus on how quickly ChatGPT reached 100 million users, but I believe this comparison falls flat.

I think that, as with the internet, all of us will pay at least a minimal subscription to access and consistently use AI. So, instead of comparing the months to 100 million free users, I want to compare paying internet users to paying Generative AI users today.

Luckily for us, internet adoption is a relatively easy metric to access. The Internet World Stats website is where I’ll start my analysis.

In the beginning, internet users had to pay to use the internet. In contrast, ChatGPT users can access GPT-3.5 for free, which attracts more users but makes comparisons of paying users hard. The better comparison is between paying internet users and paying GPT users.

Here’s a graph of the internet adoption rate as a share of the total world population.

It took 27 years to go from 0% to ~70% global penetration. If we take the estimates of paid users today, we can approximate a penetration rate for paying users of ChatGPT or LLM services. I will create a bottom-up estimate of paying users using Copilot and OpenAI estimates. Then, I’ll try to parse what the profitability of just those two units would look like and compare it to top-down penetration estimates.

Estimating LLM Penetration Today

Let’s start with what we know. The largest base of paying LLM users belongs to OpenAI’s ChatGPT. Let’s use The Information's recent revenue estimates of $1.6 billion in 2023 and $5 billion in 2024. While some of that revenue comes from the API, we can estimate that it implies ~6 million paying users in 2023 and ~21 million in 2024.
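One way to sanity-check those user counts is to divide annual revenue by an annual subscription price. The sketch below assumes a flat $20 per month subscription and ignores API revenue entirely; both are illustrative assumptions, not the method behind the estimates above.

```python
# Illustrative assumption: every dollar of revenue comes from a $20/month
# subscription. Real revenue also includes API usage, so this overstates users.
MONTHLY_PRICE_USD = 20.0  # assumed subscription price


def implied_paying_users(annual_revenue_usd: float,
                         monthly_price_usd: float = MONTHLY_PRICE_USD) -> float:
    """Paying users implied by annual revenue at a flat monthly price."""
    return annual_revenue_usd / (monthly_price_usd * 12)


# Revenue estimates cited in the text (USD).
revenue_estimates = {2023: 1.6e9, 2024: 5.0e9}
implied_users = {year: implied_paying_users(rev)
                 for year, rev in revenue_estimates.items()}

for year, users in implied_users.items():
    print(f"{year}: ~{users / 1e6:.1f} million implied paying users")
```

Under these assumptions the result is roughly 6.7 million and 20.8 million users, in the same range as the ~6 million and ~21 million figures. Netting out API revenue would pull both numbers lower.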

The next big group of paying users is Microsoft’s. The baseline is a conversion rate applied to E3 and E5 users, roughly 160 million today. I assume 10% unit growth and then apply the penetration growth rate of Office 365. That takes penetration from approximately 5% to 33% in a few years.
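The Copilot assumptions can be sketched as a growing seat base multiplied by a rising penetration rate. The 160 million seats, 10% unit growth, and 5% to 33% penetration range come from the text; the four-year horizon and the linear ramp are assumptions made here for illustration, since "a few years" is not pinned down.

```python
def copilot_paying_users(base_seats: float, seat_growth: float,
                         start_pen: float, end_pen: float,
                         years: int) -> list[float]:
    """Paying users per year: growing seat base times a ramping penetration."""
    users = []
    for t in range(years):
        seats = base_seats * (1 + seat_growth) ** t
        # Linear ramp is an assumption; a real adoption curve may be S-shaped.
        penetration = start_pen + (end_pen - start_pen) * t / (years - 1)
        users.append(seats * penetration)
    return users


# years=4 is a hypothetical horizon standing in for "a few years".
copilot_users = copilot_paying_users(160e6, 0.10, 0.05, 0.33, years=4)
for t, u in enumerate(copilot_users):
    print(f"year {t}: ~{u / 1e6:.1f} million paying Copilot users")
```

With these inputs the first year is about 8 million paying users (5% of 160 million) and the last is about 70 million (33% of roughly 213 million seats).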

Last, I’m throwing in a catchall market share assumption for niche services like Anthropic, Perplexity, and Google’s eventual offering. That number grows each year and serves as a plug value for additional user growth. Now, we can compare the total to the world population, and frankly, in a world where everyone uses LLMs, we are extremely early in the adoption curve. If we were to compare it to the internet, we are in the very early days.
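To make "extremely early" concrete, here is a minimal sketch of the penetration arithmetic. The world population is rounded to 8 billion. The OpenAI figure is the ~21 million estimate, the Copilot figure is 5% of ~160 million seats, and the catchall value is a purely hypothetical placeholder.

```python
WORLD_POPULATION = 8.0e9  # roughly 8 billion people; rounded assumption


def penetration(paying_users: float,
                population: float = WORLD_POPULATION) -> float:
    """Share of the population that pays for an LLM service."""
    return paying_users / population


# Illustrative inputs only; "catchall" is a hypothetical plug value.
paying_users = {"openai": 21e6, "copilot": 8e6, "catchall": 3e6}
total = sum(paying_users.values())
share = penetration(total)
print(f"{total / 1e6:.0f} million paying users is {share:.2%} of world population")
```

Even if the placeholder inputs are off by a wide margin, the share stays well under 1%, compared with the ~70% the internet reached after 27 years.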

I truly think the internet could become a great model of what AI will be. If we assume it takes another 30 years to reach 70% adoption, it does appear that we are very early in the adoption curve of LLMs and AI. What’s more interesting is that I can take these estimates and attempt to triangulate an eventual business model for AI.

We have user estimates for both OpenAI and Microsoft, and we know that Microsoft is the primary source of capex for both. Using what we know, I will back into what AI business models might look like on a more run-rate basis. Let’s take the bottom-up estimate and compare it to what the implied economics look like.

Industry Value Map

Let’s start with the capex from Microsoft. I assume that just the incremental capex from Microsoft is the beginning of the asset base that supports OpenAI and Copilot.

I capitalize these assets and then use the resulting cost as the COGS line. I then assume another 30% of revenue is spent on opex. Using The Information’s OpenAI estimate and the Microsoft Copilot attach assumptions, we can see glimpses of the business model. A friendly reminder: this includes both the infrastructure and model businesses.
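One reading of that approach can be sketched as follows: spread the capitalized capex over a useful life, treat the annual depreciation as the only cost of goods sold, and subtract opex at 30% of revenue. The revenue, capex, and five-year useful life below are hypothetical round numbers, not estimates from this piece, and real COGS would also include power, staffing, and other operating costs.

```python
def run_rate_margins(revenue: float, capitalized_capex: float,
                     useful_life_years: float,
                     opex_share: float = 0.30) -> dict:
    """Margins when straight-line depreciation is the only COGS item."""
    cogs = capitalized_capex / useful_life_years  # annual depreciation
    gross_margin = (revenue - cogs) / revenue
    operating_margin = gross_margin - opex_share  # opex as a share of revenue
    return {
        "cogs": cogs,
        "gross_margin": gross_margin,
        "operating_margin": operating_margin,
    }


# Hypothetical inputs chosen for round numbers.
margins = run_rate_margins(revenue=10e9, capitalized_capex=20e9,
                           useful_life_years=5)
print(margins)
```

With these placeholder inputs, depreciation is $4 billion a year, gross margin is 60%, and operating margin is 30%. The point of the sketch is the sensitivity: a shorter useful life or a larger asset base flows straight through to lower gross margin.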

What I find staggering is that despite the intense cost, if this is all the capex needed to support OAI and Copilot, run-rate gross margins will be quite good, though they should still be lower than those of traditional software. I also make assumptions about what gross margins and capex will look like for each of the three big subsegments in the AI industry.

Unsurprisingly, the most economic value in this business model so far has accrued directly to the semiconductor businesses. For them, AI is an incremental driver on top of a mature business model, not a rethinking of the entire space.

Next are the infrastructure service players. My industry model above combines the full margin of infrastructure and AI model service players. Today, the CPU-driven hyperscaler business is well understood, with 30% operating margins at the mature AWS business. But the future of GPU clouds is less certain, specifically how the value created by GPUs or accelerators gets split between infrastructure and AI models. Today, there isn’t enough margin to support both well, and the implication is that AI models will be low-margin businesses.

That doesn’t make sense to me, and it’s part of why I believe that the future business model is being hashed out today. Let’s discuss what it could look like.

The Industry Landscape is Changing

The future business model of the AI industry is in flux. The place where it’s clearly changing the quickest is the middle layer (infrastructure), and it’s having a weird moment. Semiconductor solution companies (Nvidia) are coming up the stack and are increasingly becoming single-source suppliers. At the same time, infrastructure players must try to secure their futures by partnering with AI model companies (Microsoft and OAI, Amazon and Anthropic, etc.).

There’s a battle at the boundary between semiconductors and infrastructure, and it’s unclear who wins. We’re already seeing them compete, and I would like to give an example of how things could shake out. Let me paint two different futures, one for each business model winning.

Let’s start with the blue-sky scenario for Nvidia: the GPU-cloud future.

Semiconductor First GPU-Clouds

Today's king is Nvidia, and they are selling a specific flavor of the future. The GPU is the lever that captures most of the rewards from the entire AI ecosystem, and it has clearly shown itself to be the critical piece in AI today. We are not model-poor; we are GPU-poor, and it’s likely to remain that way for some time.

Nvidia’s solution is semiconductor first, with concentric circles drawn around the core GPU business. Those circles, specifically CUDA software and networking, have made the business more defensible. In the past, I have called Nvidia’s solution a three-headed hydra of Computing, Networking, and Software.

I won't rehash all of that piece, but Nvidia, in particular, has created so much value in the AI ecosystem that they have now begun to sell data center-sized solutions. Nvidia’s reference designs for GPU clouds support the newcomers on the block, and Nvidia’s solution is so complete that if you can afford it, you might as well skip having a cloud service provider. Just buy the cloud.

CoreWeave, sovereign nations, and even Microsoft are snapping up these configurations as anchor customers. Their broader availability could dilute the hyperscalers’ power.

This is not surprising. Jensen is cunning, and this is the product of a long-held aspiration to bend the arc of computing toward acceleration. We are still living in the afterimage of his past decisions, from giving CUDA away for free to buying Mellanox and, hell, even to the GPU itself. Jensen has been the man looking five years out, and it’s no surprise that such amazing differentiation has given Nvidia so much of the value of the AI ecosystem.

But that’s the rub. Nvidia has expanded its moat so far outside of its original bounds that it’s starting to bump up against its customers. That’s always tough competition. The customers realize that being beholden to Nvidia in this way is no good, so they are pursuing vertical integration.

Let’s spin the story of what an infrastructure provider’s version of the future would look like. It runs mostly through vertical integration.

Vertical Integration

After a certain number of units or dollars are spent on semiconductors, hyperscalers ask why not just make it themselves. If you spend 20 billion dollars on a specific product, you must ask yourself that question.

This has been happening for a long time, and Google has been the most forward-thinking of the big three hyperscalers. The problem is that their sluggish execution has kept the results from showing.

Google has the lowest-cost compute for AI training, bar none, and that should be a huge deterrent and competitive advantage. The reality is that their LLMs are still behind despite Google having invented the transformer. Despite its leadership position, multiple companies now have solutions as good as Google’s. The vertical solution might not work if you can’t ship fast enough, and Google’s TPU could itself be a constraint that slows the organization’s ability to execute.

Regardless, it’s not just Google pursuing this path, as Microsoft has also begun creating its own accelerators. The world realizes how much value Nvidia captures. The vectors of competition against Nvidia are no secret; the question is how to out-execute them. Microsoft has the advantage of being close to the leader today, giving them the knowledge to cut off Nvidia someday, but that will take some time.

The reality is that both scenarios could easily be the future. Worlds are colliding, and some aspects of the stack can be slowly eroded. Semiconductors are moving up while Infrastructure is moving down. The winner takes the market. Below is a simplified graphic.

AI Industry Win Conditions

Let’s now explain how each would win behind the paywall.