Fabricated Knowledge Q2 2024 Quarterly Review

Here's everything that happened this quarter, and some thoughts and ideas.

This is my quarterly piece, where I round up my favorite ideas more explicitly and explain some semiconductor jargon. It's a meta piece of content.

Semiconductor Performance

First and foremost, I’ll start with performance. SMH returned 14% this quarter, one of the best quarters in history for the semiconductor universe (again). This quarter's leader was AOSL, which is rumored to have won a socket at Nvidia.

Thematically, let’s talk about the biggest winners and losers. This quarter, the losers look like automotive again: WOLF, INDI, MBLY, STM, and ON are near the bottom because of continued pessimism. I wrote quite a bit about this last quarter in “Pump the Brakes,” but I think that trade is likely done. It’s time to look to the other side of the cycle, where things get better from here, not worse.

The areas where I'm most bullish, and where stocks have not yet reacted, are consumer and networking. Not surprisingly, two of my ideas (behind the paywall) discuss networking in particular.

I also recently wrote about why I’m bullish on consumer and am starting to become cautious about AI. I don’t think the AI trade is over; it's just that a breather is in play, and we are already seeing things go quiet in Nvidia and the other AI infrastructure names.

Now, let’s walk through the quarter as seen through my content.

Content Overview

First and foremost, the year's best piece will likely be “Data Center: the New Unit of Compute.” It went viral, and I’m proud of it. Read it if you haven’t already.

But this quarter, I also had some ideas that were entirely off the beaten path. I’ll discuss this company, along with some recent thoughts, behind the paywall.

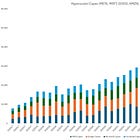

Another critical piece this quarter was the capex piece. This high-level tracker is essential to understanding the health of AI ecosystem spending. From what I see, last quarter's capex guides continue to give the all-clear for continued fundamental progress. The stocks are a different story, however.

The earnings coverage was pretty dull this quarter. I think the market continues to like more of the same. However, I found an excellent short-term idea in the Crypto Data Center NAV piece. These ideas will likely keep working.

We are now entering the seasonally slower summer months. It’s been a good year for stocks, and I expect that to continue. Anyway, I want to discuss some of the jargon I have been seeing that needs explaining.

Jargon Corner

In an effort to keep explaining semiconductors and reducing their complexity, here are a few basic concepts I’ve seen pop up that I think deserve some explanation.

Ultra Accelerator Link (UALink) - This is an open consortium’s response to NVLink, Nvidia's GPU-to-GPU interconnect that creates a large coherent domain of GPUs. It’s no secret that NVLink has been a massive reason why Nvidia wins. UALink is basically NVLink for Intel, AMD, and other accelerators.

The UALink initiative is designed to create an open standard for AI accelerators to communicate more efficiently. The first UALink specification, version 1.0, will enable the connection of up to 1,024 accelerators within an AI computing pod in a reliable, scalable, low-latency network. This specification allows for direct data transfers between the memory attached to accelerators, such as AMD's Instinct GPUs or specialized processors like Intel's Gaudi, enhancing performance and efficiency in AI compute.
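For a rough feel of why "scalable" matters at 1,024 accelerators, here is some back-of-the-envelope arithmetic (my own illustration, not anything from the UALink specification): if every accelerator were wired directly to every other one in a full mesh, the link count grows quadratically with pod size. The function name below is hypothetical.

```python
def full_mesh_links(n: int) -> int:
    """Point-to-point links needed if every accelerator is wired directly to every other."""
    return n * (n - 1) // 2


for n in (8, 64, 1024):
    # n - 1 is the number of ports each accelerator would need in a full mesh
    print(f"{n:>5} accelerators: {full_mesh_links(n):>7} links, {n - 1:>4} ports each")
```

At 1,024 accelerators a full mesh would need 523,776 links and 1,023 ports per accelerator, which is part of why a pod that size needs a network fabric rather than direct wiring.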

Scalable Hierarchical Aggregation Protocol (SHArP) - This is a Mellanox (now Nvidia) protocol that reduces the work CPUs have to do for collective operations across the network. It optimizes global operations by performing reductions (such as sums) inside the switches as data flows through them, so fewer CPU cycles are needed by the time the data reaches the CPU.

It's basically a special header that only works on Nvidia switches, and it's an example of in-network compute for reductions. You can read more in this paper, which I found pretty intuitive.
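To make the idea of "reductions in the network" concrete, here is a toy simulation, a minimal sketch under simplifying assumptions and not how SHArP is actually implemented. The tree shape, the `Switch` class, and the function names are all hypothetical. Without in-network help, one endpoint has to receive every host's raw value and do all the adds; with switch-side aggregation, each switch adds what arrives on its ports and forwards a single partial sum upward.

```python
from dataclasses import dataclass, field


@dataclass
class Switch:
    """A toy switch: values from directly attached hosts plus child switches below it."""
    host_values: list[int] = field(default_factory=list)
    children: list["Switch"] = field(default_factory=list)


def all_host_values(sw: Switch) -> list[int]:
    """Gather every host value in the subtree rooted at this switch."""
    values = list(sw.host_values)
    for child in sw.children:
        values.extend(all_host_values(child))
    return values


def host_side_reduce(top: Switch) -> tuple[int, int]:
    """No in-network help: every host ships its raw value to a single endpoint,
    which does all the additions itself.
    Returns (sum, messages that endpoint must receive and process)."""
    values = all_host_values(top)
    return sum(values), len(values)


def in_network_reduce(sw: Switch) -> tuple[int, int]:
    """Switch-side aggregation: each switch adds what arrives on its ports and
    forwards one partial sum upward.
    Returns (sum, messages received by this switch)."""
    partials = [in_network_reduce(child)[0] for child in sw.children]
    received = len(sw.host_values) + len(partials)
    return sum(sw.host_values) + sum(partials), received


# Hypothetical two-level tree: 4 leaf switches, 8 hosts each; host i contributes i.
leaves = [Switch(host_values=list(range(8 * k, 8 * k + 8))) for k in range(4)]
top = Switch(children=leaves)

print(host_side_reduce(top))   # (496, 32)
print(in_network_reduce(top))  # (496, 4)
```

Both paths compute the same sum, but the top of the tree handles 4 pre-reduced messages instead of 32 raw ones. That gap widens as the cluster grows, which is the whole point of pushing the math into the switches.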

Front-End Network - Let’s talk about the fabric types in a GPU network. First, there’s the front-end fabric, which handles TCP traffic, REST API requests, and storage traffic. This is the traditional Ethernet fabric you'd think of in a CPU-driven data center.

Back-End Network - Then there’s the back-end fabric, dedicated to GPU all_reduce and other collective operations, plus other inter-GPU communication. Mixing up the two is what caused the confusion at GTC about there being fewer transceivers, when in reality there is a back-end network in addition to the front-end network. NVLink is an example of this back-end fabric.
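For a sense of what all_reduce actually asks the back-end fabric to do: every GPU starts with its own buffer, and every GPU must end up with the elementwise sum across all of them. Below is a single-process toy simulation of the classic ring algorithm (a reduce-scatter phase followed by an all-gather phase). This is a sketch for intuition only, not Nvidia's or NCCL's implementation; libraries like NCCL choose among several algorithms, and the function name and example buffers here are hypothetical.

```python
def ring_all_reduce(buffers: list[list[float]]) -> list[list[float]]:
    """Simulate ring all-reduce across len(buffers) 'GPUs' arranged in a ring.
    Each rank only ever sends to its right-hand neighbor, one chunk per step."""
    n = len(buffers)
    data = [list(b) for b in buffers]  # copy so the inputs stay untouched
    length = len(data[0])
    # Split each buffer into n contiguous chunks (some may be empty if length < n).
    bounds = [(i * length // n, (i + 1) * length // n) for i in range(n)]

    # Phase 1, reduce-scatter: after n - 1 steps, rank r holds the fully
    # summed chunk (r + 1) % n.
    for step in range(n - 1):
        # Snapshot every send first so all ranks "transmit" simultaneously.
        sends = []
        for rank in range(n):
            c = (rank - step) % n
            lo, hi = bounds[c]
            sends.append((c, data[rank][lo:hi]))
        for rank in range(n):
            c, chunk = sends[(rank - 1) % n]  # receive from left neighbor
            lo, _ = bounds[c]
            for j, value in enumerate(chunk):
                data[rank][lo + j] += value

    # Phase 2, all-gather: circulate the finished chunks so every rank has all of them.
    for step in range(n - 1):
        sends = []
        for rank in range(n):
            c = (rank + 1 - step) % n
            lo, hi = bounds[c]
            sends.append((c, data[rank][lo:hi]))
        for rank in range(n):
            c, chunk = sends[(rank - 1) % n]
            lo, hi = bounds[c]
            data[rank][lo:hi] = chunk  # overwrite with the already-reduced chunk

    return data


# Hypothetical example: 4 GPUs, 8 values each; GPU r holds 10*r + i at position i.
gpus = [[10 * r + i for i in range(8)] for r in range(4)]
result = ring_all_reduce(gpus)
print(result[0])  # [60, 64, 68, 72, 76, 80, 84, 88], identical on every GPU
```

In the ring version, each GPU sends roughly 2(n - 1)/n times its buffer size per all_reduce, and every step is GPU-to-GPU traffic. That traffic is what the back-end fabric exists to carry, separate from the TCP, API, and storage traffic on the front end.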

Lastly, let’s discuss my ideas and my idea set. Here’s what I find interesting (or unattractive) this quarter.